Development of a Smart Sleeve Control Mechanism for Active Assisted Living

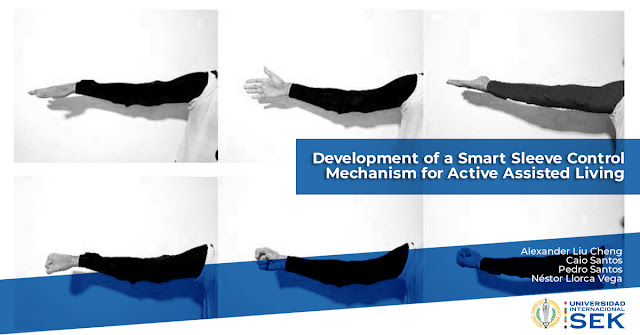

This paper describes the development of a Smart Sleeve control mechanism for Active Assisted Living. The Smart Sleeve is a physically worn sleeve that extends the user's control capability within his/her intelligent built-environment by enabling (1) direct actuation and/or (2) teleoperation via a virtual interface. With respect to the first, the user may point his/her sleeve-wearing arm towards a door or a window to open or shut it; towards a light to turn it on or off and regulate its intensity; or towards a particular region to initiate its ventilation. With respect to the second, the user may actuate systems beyond his/her field of vision by interacting with a virtual representation of the intelligent built-environment projected on any screen or surface. For example, the user can shut the kitchen door from his/her bedroom by extending the Smart Sleeve towards the door in a virtual representation of the kitchen projected (via a standard projector and/or television monitor) on the bedroom wall.

In both cases, the Smart Sleeve recognizes the object the user wishes to engage with, whether in the real or the virtual world, (a) by detecting the orientation of the extended arm via an accelerometer/gyroscope/magnetometer sensor; and (b) by detecting, via electromyography, a specific forearm muscle contraction caused by the closing and opening of the fist, which serves to select the object. Once the object is identified and selected with this muscle contraction, subsequent arm gestures effect a variety of possible actuations for that object (e.g., a window may be opened, shut, or dragged to differing degrees of aperture; a light's intensity may be increased or decreased). In cases of ambiguous selection, the user may use voice commands to explicitly identify the intended object. Furthermore, because this voice-based mechanism recognizes both spoken commands and the identity of the speaker, different actuation rights may be assigned to different users.
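The abstract does not say how an arm orientation is matched to an object or how the fist gesture is detected, so the following is only a minimal Python sketch of one plausible selection pipeline, under loudly stated assumptions: objects are registered with hypothetical 3-D room coordinates; the accelerometer/gyroscope/magnetometer output has already been fused into yaw and pitch angles; an object counts as a candidate when it lies inside a hypothetical 10° cone around the arm; and the clench-then-release gesture is detected with two hysteresis thresholds on an RMS envelope of the raw EMG signal. Voice disambiguation and per-speaker rights are reduced to a string match and a lookup table; actual speech and speaker recognition are out of scope. Every name, threshold, and coordinate (`Target`, `candidates`, `clench_release`, `RIGHTS`, and so on) is illustrative and not taken from the paper.

```python
import math
from dataclasses import dataclass

# Hypothetical sketch: names, thresholds and coordinates are illustrative
# assumptions, not values from the Smart Sleeve system.

@dataclass
class Target:
    name: str
    position: tuple[float, float, float]  # room coordinates in metres

def pointing_vector(yaw_deg: float, pitch_deg: float) -> tuple[float, float, float]:
    """Unit vector along the extended arm, from fused IMU yaw (heading) and pitch."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.cos(yaw),
            math.cos(pitch) * math.sin(yaw),
            math.sin(pitch))

def angle_to(user_pos, direction, target: Target) -> float:
    """Angle (degrees) between the pointing ray and the user-to-target ray."""
    v = [t - u for t, u in zip(target.position, user_pos)]
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        return 0.0  # target coincides with the user's position
    cos_a = sum(d * c for d, c in zip(direction, v)) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))

def candidates(user_pos, yaw_deg, pitch_deg, targets, max_angle=10.0):
    """Names of targets inside a max_angle cone around the arm, best match first."""
    d = pointing_vector(yaw_deg, pitch_deg)
    scored = sorted((angle_to(user_pos, d, t), t.name) for t in targets)
    return [name for angle, name in scored if angle <= max_angle]

def emg_envelope(samples, window=4):
    """RMS of consecutive non-overlapping windows of raw forearm EMG samples."""
    return [math.sqrt(sum(s * s for s in samples[i:i + window]) / window)
            for i in range(0, len(samples) - window + 1, window)]

def clench_release(envelope, on=0.5, off=0.3) -> bool:
    """True if the envelope rises above `on` (fist closes) and later drops
    below `off` (fist opens); the gap between thresholds adds hysteresis."""
    closed = False
    for level in envelope:
        if not closed and level > on:
            closed = True
        elif closed and level < off:
            return True
    return False

def resolve(cands, spoken_name=None):
    """A single candidate wins; otherwise a spoken name must disambiguate."""
    if len(cands) == 1:
        return cands[0]
    if spoken_name in cands:
        return spoken_name
    return None  # still ambiguous

# Hypothetical actuation rights keyed by recognized speaker identity.
RIGHTS = {"resident": {"kitchen_door", "window", "bedroom_light"},
          "guest": {"bedroom_light"}}

def may_actuate(speaker, target_name) -> bool:
    return target_name in RIGHTS.get(speaker, set())

if __name__ == "__main__":
    room = [Target("kitchen_door", (3.0, 0.0, 1.0)),
            Target("window", (3.0, 0.3, 1.2)),
            Target("bedroom_light", (0.0, 3.0, 2.5))]
    user = (0.0, 0.0, 1.2)
    cands = candidates(user, yaw_deg=0.0, pitch_deg=0.0, targets=room)
    print(cands)                     # ['kitchen_door', 'window']
    print(resolve(cands))            # None
    print(resolve(cands, "window"))  # window
    raw = [0.1, -0.1, 0.1, -0.1, 0.8, -0.8, 0.8, -0.8, 0.1, -0.1, 0.1, -0.1]
    print(clench_release(emg_envelope(raw)))  # True
    print(may_actuate("guest", "window"))     # False
```

In the usage example, the door and the window both fall inside the cone, so the pointing step alone is ambiguous and a spoken name is needed, mirroring the voice fallback described above. Presumably the same angular test could be applied to objects in the projected virtual representation by mapping their on-screen positions into the same coordinate frame, though the abstract does not describe how this is done.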

- Alexander Liu Cheng

- Caio Santos

- Pedro Santos

- Néstor Llorca Vega
